[Feb 21, 2026] 3V0-24.25 Advanced VMware Cloud Foundation 9.0 vSphere Kubernetes Service Exam – Free Practice Questions to Test Your Readiness

The 3V0-24.25 Advanced VMware Cloud Foundation 9.0 vSphere Kubernetes Service Exam is designed for experienced VMware professionals who want to validate their advanced-level skills in deploying, configuring, and troubleshooting Kubernetes workloads within VMware Cloud Foundation (VCF) environments. This certification focuses heavily on vSphere Kubernetes Service (VKS) integration, workload domain architecture, networking, lifecycle management, and enterprise-grade Kubernetes operations. If you are working with modern cloud-native applications inside VMware environments, passing the 3V0-24.25 exam proves that you can confidently design and manage production-ready Kubernetes infrastructure on VCF 9.0.

Free 3V0-24.25 Practice Questions – Test Your Knowledge

Try the following sample questions to evaluate your readiness.

1. An administrator is tasked with making an existing vSphere Supervisor highly available by adding two additional vSphere Zones.

How should the administrator perform this task?

A. You cannot add an existing Supervisor to a new vSphere Zone.

B. Create a new multi-zone deployment and assign an existing vSphere cluster to it.

C. Create a new vSphere Zone and add the Supervisor to the new vSphere Zone.

D. Select Configure, select vSphere Zones, and click Add New vSphere Zone.

Answer: A

Explanation:

In VMware Cloud Foundation 9.0 and vSphere Supervisor architectures, the decision to deploy a Single-Zone or a Multi-Zone Supervisor is made at the time of initial enablement. A Single-Zone Supervisor is tied to a specific vSphere Cluster. A Multi-Zone Supervisor requires a minimum of three vSphere Zones (each mapped to a cluster) to be defined before the Supervisor is deployed so that the Control Plane VMs can be distributed for high availability.

Currently, there is no supported “in-place” migration path to convert a deployed Single-Zone Supervisor into a Multi-Zone Supervisor by simply adding zones later. If an organization requires the high availability provided by a three-zone architecture, the administrator must decommission the existing Single-Zone Supervisor and then re-enable the Supervisor Service using the Multi-Zone configuration wizard. This design ensures that the underlying Kubernetes Control Plane components are correctly instantiated with the necessary quorum and anti-affinity rules that can only be established during the initial “Workload Management” setup phase.

2. What three components run in a VMware vSphere Kubernetes Service (VKS) cluster? (Choose three.)

A. Cloud Provider Implementation

B. Container Network Implementation

C. Cloud Provider Interface

D. Container Storage Interface

E. Cloud Storage Implementation

F. Container Network Interface

Answer: A D F

Explanation:

VCF 9.0 explicitly lists the components that run in a VKS cluster and groups them into areas such as authentication/authorization, storage integration, pod networking, and load balancing. In that list, the documentation names: “Container Storage Interface Plugin” (a paravirtual CSI plug-in that integrates with CNS through the Supervisor), “Container Network Interface Plug-in” (a CNI plugin that provides pod networking), and “Cloud Provider Implementation” (supports creating Kubernetes load balancer services).

These three items map directly to the answer choices D (Container Storage Interface), F (Container Network Interface), and A (Cloud Provider Implementation). The same VCF 9.0 section also mentions an authentication webhook, but that component is not offered as a selectable option in this question, so the best three matches among the provided choices are the CSI, CNI, and cloud provider implementation entries that the document explicitly states are present inside a VKS cluster.

3. An administrator has deployed a Supervisor cluster on vSphere in a multi-zone-enabled environment and now wants to create a zonal vSphere Namespace so that workloads can be scheduled across zones.

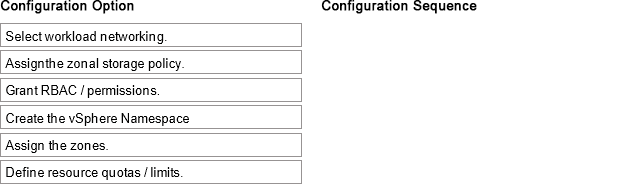

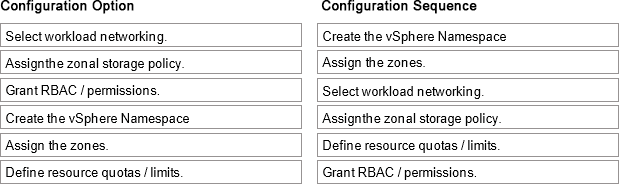

Drag and drop the six actions from the Configuration Option list on the left into the correct order in the Configuration Sequence on the right. (Choose six.)

Answer:

Explanation:

Configuration Sequence (in order):

Create the vSphere Namespace

Assign the zones

Select workload networking

Assign the zonal storage policy

Define resource quotas / limits

Grant RBAC / permissions

A zonal vSphere Namespace is created as a standard namespace first, then “zonalized” by associating it with one or more vSphere Zones so workloads can be scheduled according to zone placement rules. You start by creating the namespace because it is the tenancy and governance container where networking, storage access, quotas, and permissions are applied. Next, you assign the zones, since zone association is what makes the namespace “zonal” and determines where Kubernetes workloads (and their node pools) are allowed to land.

With zones set, you configure workload networking, because namespaces must have the correct network attachment and IP behavior for the workloads that will be placed across the selected zones. Then you assign the zonal storage policy, ensuring that persistent volumes can be provisioned using storage that is valid/available for the zone placement model you selected. After networking and storage access are defined, you set resource quotas/limits (CPU, memory, storage) so multi-tenant consumption stays within governance boundaries. Finally, you grant RBAC/permissions so the right DevOps/users can consume the namespace and provision clusters/workloads under the enforced controls.

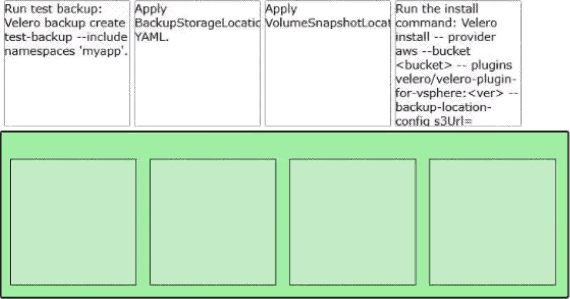

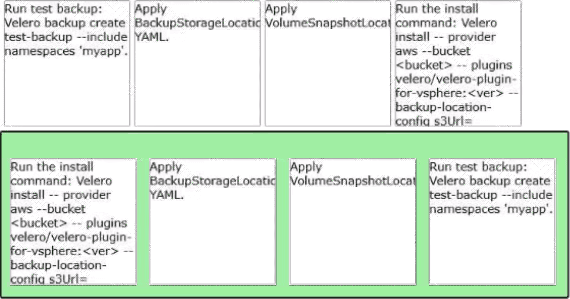

4. A VMware administrator is tasked with implementing a backup and restore strategy using Velero and external object storage for the namespace ‘myapp1’. Arrange the steps in the correct order of operations to enable Velero.

Answer:

Explanation:

Answer (Correct Order):

Run the install command: velero install … --provider aws --bucket <bucket> … --plugins … --backup-location-config …

Apply BackupStorageLocation YAML.

Apply VolumeSnapshotLocation YAML.

Run test backup: velero backup create test-backup --include-namespaces "myapp1"

The correct sequence follows Velero’s operational model: install the Velero components first, then define where backups and snapshots are stored, and finally validate with a real backup. In VCF 9.0, the Velero Plugin for vSphere installation command includes parameters for the object-store provider, bucket, and plugin images, which establishes the Velero control plane in the target namespace and prepares it to communicate with an S3-compatible store.

After the installation is in place, you apply the BackupStorageLocation configuration so Velero has a durable destination for Kubernetes backup metadata in the object store. This aligns with the VCF 9.0 guidance that backups upload Kubernetes metadata to the object store and require S3-compatible storage for backup/restore workflows.

Next, apply the VolumeSnapshotLocation so Velero knows how and where to create/track volume snapshots for stateful workloads. The VCF 9.0 install example explicitly includes snapshot/backup location configuration parameters, reflecting that both must be set for complete protection.

Finally, run a test backup scoped to the namespace (here, --include-namespaces=myapp1) to confirm end-to-end functionality.
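The two location objects and the test command from the sequence above can be sketched in Python as follows. This is only an illustration under stated assumptions: the namespace `velero`, the bucket name, the region, and the S3 endpoint are hypothetical placeholders, and the `aws` provider is reused for the snapshot location simply because it is the provider shown in the install command. The `velero.io/v1` kinds and the `--include-namespaces` flag are standard Velero; everything else should be replaced with values from your environment.

```python
import json

# Hypothetical placeholder values; substitute the real bucket, region, and endpoint.
VELERO_NAMESPACE = "velero"
BUCKET = "example-backup-bucket"
REGION = "us-east-1"
S3_URL = "https://s3.example.internal"


def backup_storage_location(name="default"):
    """Build a velero.io/v1 BackupStorageLocation for an S3-compatible bucket."""
    return {
        "apiVersion": "velero.io/v1",
        "kind": "BackupStorageLocation",
        "metadata": {"name": name, "namespace": VELERO_NAMESPACE},
        "spec": {
            "provider": "aws",
            "objectStorage": {"bucket": BUCKET},
            "config": {
                "region": REGION,
                "s3ForcePathStyle": "true",
                "s3Url": S3_URL,
            },
        },
    }


def volume_snapshot_location(name="default"):
    """Build a velero.io/v1 VolumeSnapshotLocation (provider is an assumption)."""
    return {
        "apiVersion": "velero.io/v1",
        "kind": "VolumeSnapshotLocation",
        "metadata": {"name": name, "namespace": VELERO_NAMESPACE},
        "spec": {"provider": "aws", "config": {"region": REGION}},
    }


def smoke_backup_command(namespace="myapp1"):
    """Return the argv for a namespace-scoped test backup."""
    return ["velero", "backup", "create", "test-backup",
            "--include-namespaces", namespace]


if __name__ == "__main__":
    # Order matters: storage location, then snapshot location, then the test backup.
    for manifest in (backup_storage_location(), volume_snapshot_location()):
        print(json.dumps(manifest, indent=2))
    print(" ".join(smoke_backup_command()))
```

Each printed JSON document can be saved to a file and applied with `kubectl apply -f`, which accepts JSON as well as YAML; the final line is the command to run once both locations exist.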

5. A VKS administrator is tasked to leverage day-2 controls to monitor, scale, and optimize Kubernetes clusters across multiple operating systems and workload characteristics.

What two steps should the administrator take? (Choose two.)

A. Configure namespace quotas to set resource limits for CPU, memory, and storage.

B. Disable Cluster Autoscaler to ensure resources in the pool are not depleted.

C. Deploy Prometheus and Grafana to collect and display scrapeable metrics on nodes, pods, and applications.

D. Set all VM Class limits to Compute Heavy to ensure worker nodes get all the resources needed.

E. Ensure all node pools use the same Machine Deployment configuration for different workload characteristics.

Answer: A C

Explanation:

VCF 9.0 describes a vSphere Namespace as the control point where administrators define resource boundaries for workloads, explicitly stating that vSphere administrators can create namespaces and “configure them with specified amount of memory, CPU, and storage,” and that you can “set limits for CPU, memory, storage” for a namespace. This directly supports step A as a day-2 control to keep multi-tenant clusters governed and prevent resource contention across different teams and workload types.
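To make the governance idea concrete, here is a minimal sketch of how a namespace limit acts as an admission check on CPU, memory, and storage. The `Resources` type, its field names, and its units are hypothetical and chosen only for illustration; they are not the vSphere or Kubernetes API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Resources:
    """Illustrative resource triple; field names and units are assumptions."""
    cpu_mhz: int
    memory_mib: int
    storage_gib: int


def exceeded_limits(limits: Resources, used: Resources, requested: Resources) -> List[str]:
    """Return the names of resources that would exceed the namespace limits."""
    exceeded = []
    for field in ("cpu_mhz", "memory_mib", "storage_gib"):
        if getattr(used, field) + getattr(requested, field) > getattr(limits, field):
            exceeded.append(field)
    return exceeded


if __name__ == "__main__":
    limits = Resources(cpu_mhz=10000, memory_mib=16384, storage_gib=100)
    used = Resources(cpu_mhz=6000, memory_mib=8192, storage_gib=40)
    requested = Resources(cpu_mhz=2000, memory_mib=10240, storage_gib=20)
    # Memory would reach 18432 MiB against a 16384 MiB limit.
    print(exceeded_limits(limits, used, requested))
```

A request is acceptable only when the returned list is empty, which is the behavior quotas are meant to enforce across tenants sharing one Supervisor.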

For monitoring and optimization, VCF 9.0 explains that day-2 operations include visibility into utilization and operational metrics for VKS clusters, noting that application teams can use day-2 actions and gain insights into CPU and memory utilization and advanced metrics (including contention and availability) for VKS clusters. In addition, VCF 9.0 monitoring guidance for VKS clusters states that Telegraf and Prometheus must be installed and configured on each VKS cluster before metrics and object details are sent for monitoring, and that VCF Operations supports metrics collection for Kubernetes objects (namespaces, nodes, pods, containers) via Prometheus. Since the Prometheus stack commonly includes Grafana dashboards for visualization, deploying Prometheus + Grafana matches the required monitoring/optimization outcome in C.

6. An administrator is updating a VMware vSphere Kubernetes Service (VKS) cluster by editing the cluster manifest. When saving, there is no indication that the edit was successful.

Based on the scenario, what action should the administrator take to edit and apply changes to the manifest?

A. Define the KUBE_EDITOR or EDITOR environment variable.

B. Verify the account editing the cluster manifest has appropriate permissions.

C. Ensure the file permissions are set to read-write.

D. Restart the VKS services and edit the file again.

Answer: A

Explanation:

The documented behavior of kubectl edit is that it opens the object manifest in a local text editor, and the editor it launches is controlled by environment variables. In the VCF 9.0 documentation (Workload Management / Supervisor operations), the procedure explicitly states: “The kubectl edit command opens the namespace manifest in the text editor defined by your KUBE_EDITOR or the EDITOR environment variable.”

If the administrator’s environment does not have KUBE_EDITOR or EDITOR defined (or they point to an invalid/interactive-less editor in the current session), kubectl edit may not open the expected editor workflow, and the admin may not see the normal confirmation after saving and exiting. Setting KUBE_EDITOR (preferred for kubectl-specific behavior) or EDITOR ensures kubectl launches a known-good editor, allowing the manifest to be modified, saved, and then submitted back to the API server when the editor exits. The same documented requirement also applies to editing other Kubernetes objects (such as a VKS cluster manifest) because the kubectl edit mechanism is identical.
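The lookup order described above can be sketched as a small function. This mirrors the precedence stated in the kubectl help text (KUBE_EDITOR, then EDITOR, then a platform default of `vi` on Linux or `notepad` on Windows); the function name `resolve_editor` and its parameters are hypothetical and are not part of kubectl.

```python
import os
import sys
from typing import Mapping, Optional


def resolve_editor(env: Optional[Mapping[str, str]] = None,
                   platform: str = sys.platform) -> str:
    """Pick the editor the way kubectl edit is documented to: first non-empty variable wins."""
    env = os.environ if env is None else env
    for var in ("KUBE_EDITOR", "EDITOR"):
        value = env.get(var, "")
        if value:  # an empty value is treated the same as unset
            return value
    # Documented fallback when neither variable is set.
    return "notepad" if platform.startswith("win") else "vi"


if __name__ == "__main__":
    print("kubectl edit would launch:", resolve_editor())
```

Running this in the same shell session as kubectl is a quick way to see which editor will be launched before editing a cluster manifest.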

7. Which feature in VMware vSphere Kubernetes Service (VKS) provides vSphere storage policy integration that supports provisioning persistent volumes and their backing virtual disks?

A. Cloud storage provider

B. vSphere Cloud Native Storage (CNS)

C. Container Storage Interface (CSI)

D. Cloud storage implementation

Answer: B

Explanation:

VCF 9.0 describes Cloud Native Storage (CNS) on vCenter as the component that implements “provisioning and lifecycle operations for persistent volumes.” When provisioning persistent volumes, CNS “interacts with the vSphere First Class Disk functionality to create virtual disks that back the volumes,” and it “communicates with Storage Policy Based Management to guarantee a required level of service to the disks.” This is exactly the storage-policy-to-backed-virtual-disk relationship the question is testing: storage policies (via policy-based management) define requirements, and CNS is the vCenter-side service that applies those requirements while creating and managing the backing storage objects.

In contrast, CSI (including Supervisor CNS-CSI and VKS pvCSI) is the Kubernetes-facing interface/driver used to request and consume storage, but it does not “provide” the vSphere storage policy system; rather, it relies on CNS/CNS-CSI and vCenter services to fulfill provisioning requests. Therefore, vSphere Cloud Native Storage (CNS) is the correct choice.
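From the Kubernetes side, the request that CSI hands off for provisioning is an ordinary PersistentVolumeClaim that names a storage class. The sketch below builds such a claim in Python; the claim name, namespace, size, and the storage class name `example-vsphere-policy` are hypothetical, and the assumption that the storage class corresponds to a vSphere storage policy assigned to the namespace should be checked against your environment.

```python
import json


def persistent_volume_claim(name, namespace, storage_class, size):
    """Build a core/v1 PersistentVolumeClaim that requests storage from a storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Assumed to map to a vSphere storage policy; the name is a placeholder.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    claim = persistent_volume_claim(
        name="data-claim",
        namespace="myapp1",
        storage_class="example-vsphere-policy",
        size="10Gi",
    )
    print(json.dumps(claim, indent=2))
```

The claim only states what is wanted; creating the backing virtual disk and enforcing the policy is the CNS-side work described in the explanation.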

8. An administrator is deploying a vSphere Supervisor with NSX.

What will determine the deployment size for the load balancer?

A. The Edge node form factor.

B. The Edge cluster form factor.

C. The number of Kubernetes pods that will be deployed.

D. The number of vSphere Kubernetes clusters deployed.

Answer: A

Explanation:

VCF 9.0 design guidance for the Supervisor NSX Load Balancer model states that the NSX load balancers run on NSX Edges, and sets explicit sizing requirements at the Edge node level. In the “NSX Load Balancer Design Requirements,” VCF 9.0 requires that the NSX Edge cluster must be deployed because “NSX Load Balancers run on NSX Edges.” It further requires that “NSX Edge nodes must be deployed with a minimum of Large form factor” because NSX load balancers have fixed resource allocations on NSX Edge nodes, and the Large form factor is needed to accommodate basic system and workload needs.

This directly ties “deployment size” of the load balancer service capacity to the Edge node form factor (Small/Medium/Large, etc.) rather than the number of pods or the number of clusters. The document also notes that if additional load balancers are required, NSX Edges can be scaled up or out, again reinforcing that sizing is an Edge node sizing decision.

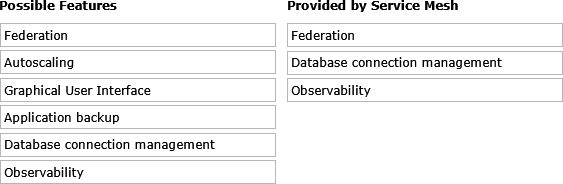

9. Drag and drop the three features from the Possible Features list on the left into the Provided by Service Mesh list on the right. (Choose three.)

Answer:

Explanation:

Provided by Service Mesh (choose three, in order):

Federation

Graphical User Interface

Observability

A service mesh is an application networking layer that manages service-to-service communication across Kubernetes clusters, providing consistent connectivity, policy enforcement, and visibility without requiring application code changes. Federation is a service-mesh capability because modern meshes (especially multi-cluster/enterprise implementations) can connect services across multiple clusters and environments, enabling shared identity, cross-cluster service discovery, and uniform policy application (often described as multi-cluster or federated service connectivity). A Graphical User Interface is commonly provided alongside the service mesh platform to centrally configure policies (traffic routing, access controls, security settings) and to visualize service topology and health. Observability is a core service-mesh outcome: by inserting sidecar proxies (or equivalent data plane components) into the data path, the mesh can generate consistent metrics, logs, and distributed traces for service traffic, enabling latency/error monitoring and dependency mapping.

The other options are not service-mesh features: Autoscaling is handled by Kubernetes/HPA and metrics pipelines, application backup is typically provided by backup tools (e.g., Velero-like solutions), and database connection management is handled by application frameworks or database proxies rather than the service mesh itself.

10. An architect is working on the data protection design for a VMware Cloud Foundation (VCF) solution. The solution consists of a single Workload Domain that has vSphere Supervisor activated. During a customer workshop, the customer requested that vSphere Pods must be used for a number of third-party applications that have to be protected via backup.

Which backup method or tool should be proposed by the architect to satisfy this requirement?

A. Standalone Velero with Restic.

B. vCenter file-based backup.

C. Velero Plugin for vSphere.

D. vSAN Snapshots.

Answer: C

Explanation:

VCF 9.0 distinguishes between backing up the Supervisor control plane and backing up workloads that run on the Supervisor, including vSphere Pods. In the “Considerations for Backing Up and Restoring Workload Management” table, the scenario “Backup and restore vSphere Pods” explicitly lists the required tool as “Velero Plugin for vSphere”, with the guidance to “Install and configure the plug-in on the Supervisor.”

The same document is explicit that standalone Velero with Restic is not valid for vSphere Pods, stating: “You cannot use Velero standalone with Restic to backup and restore vSphere Pods. You must use the Velero Plugin for vSphere installed on the Supervisor.”

vCenter file-based backup is documented for restoring the Supervisor control plane state, not for backing up and restoring vSphere Pod workloads themselves. Therefore, to meet the requirement to protect third-party applications running as vSphere Pods, the architect should propose the Velero Plugin for vSphere.

Get the Full 3V0-24.25 Practice Exam

👉 Get the complete version here:

[Download the Latest 3V0-24.25 Practice Exam – Full Version Access]

Don’t leave your advanced VMware certification to chance. Prepare smart, practice efficiently, and pass the 3V0-24.25 exam with confidence.
