59. 1. Topic 1, Contoso Case

Case Study

Transactional Data

Contoso has three years of customer, transactional, operational, sourcing, and supplier data comprised of 10 billion records stored across multiple on-premises Microsoft SQL Server servers. The SQL Server instances contain data from various operational systems. The data is loaded into the instances by using SQL Server Integration Services (SSIS) packages.

You estimate that combining all product sales transactions into a company-wide sales transactions dataset will result in a single table that contains 5 billion rows, with one row per transaction.

Most queries targeting the sales transactions data will be used to identify which products were sold in retail stores and which products were sold online during different time periods. Sales transaction data that is older than three years will be removed monthly.
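As a rough sizing check, the figures above can be turned into rows per storage unit. The sketch below is illustrative only: it assumes the 60 distributions that a dedicated SQL pool in Azure Synapse Analytics uses for every table, one partition per month over the three-year retention window, a columnstore table, and a perfectly even spread of rows. All names are hypothetical, and real data will be skewed.

```python
# Rough sizing sketch; names and the even-spread assumption are illustrative.
TOTAL_ROWS = 5_000_000_000      # estimated rows in the sales transactions table
DISTRIBUTIONS = 60              # a dedicated SQL pool spreads each table over 60 distributions
MONTHLY_PARTITIONS = 3 * 12     # assumption: one partition per month over three years
ROWGROUP_MAX_ROWS = 1_048_576   # maximum number of rows in one columnstore rowgroup


def rows_per_distribution(total_rows: int, distributions: int = DISTRIBUTIONS) -> int:
    """Rows each distribution holds if rows are spread evenly."""
    return total_rows // distributions


def rows_per_partition_slice(total_rows: int, partitions: int,
                             distributions: int = DISTRIBUTIONS) -> int:
    """Rows in one partition of one distribution, assuming an even spread."""
    return total_rows // (distributions * partitions)


if __name__ == "__main__":
    per_dist = rows_per_distribution(TOTAL_ROWS)
    per_slice = rows_per_partition_slice(TOTAL_ROWS, MONTHLY_PARTITIONS)
    print(f"rows per distribution: {per_dist:,}")
    print(f"rows per partition per distribution: {per_slice:,}")
    print("fills at least one rowgroup:", per_slice >= ROWGROUP_MAX_ROWS)
```

Under these assumptions, each monthly slice holds roughly 2.3 million rows, which is more than one full rowgroup. A much finer granularity, such as daily partitions, would not pass the same check.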

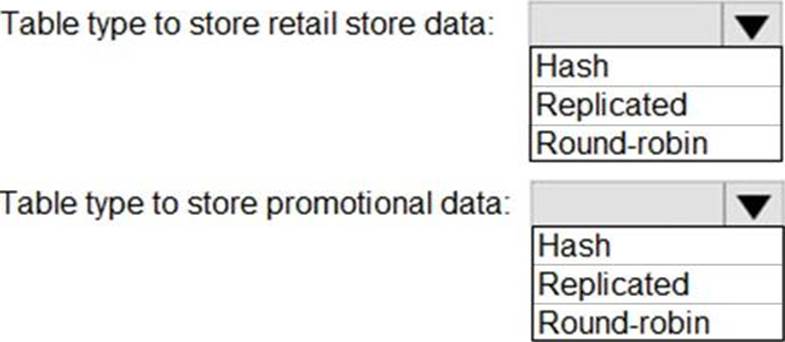

You plan to create a retail store table that will contain the address of each retail store. The table will be approximately 2 MB. Queries for retail store sales will include the retail store addresses.

You plan to create a promotional table that will contain a promotion ID. The promotion ID will be associated to a specific product. The product will be identified by a product ID. The table will be approximately 5 GB.

Streaming Twitter Data

The ecommerce department at Contoso develops an Azure logic app that captures trending Twitter feeds referencing the company’s products and pushes the products to Azure Event Hubs.

Planned Changes

Contoso plans to implement the following changes:

* Load the sales transaction dataset to Azure Synapse Analytics.

* Integrate on-premises data stores with Azure Synapse Analytics by using SSIS packages.

* Use Azure Synapse Analytics to analyze Twitter feeds to assess customer sentiments about products.

Sales Transaction Dataset Requirements

Contoso identifies the following requirements for the sales transaction dataset:

• Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by month. Boundary values must belong to the partition on the right.

• Ensure that queries joining and filtering sales transaction records based on product ID complete as quickly as possible.

• Implement a surrogate key to account for changes to the retail store addresses.

• Ensure that data storage costs and performance are predictable.

• Minimize how long it takes to remove old records.

Customer Sentiment Analytics Requirements

Contoso identifies the following requirements for customer sentiment analytics:

• Allow Contoso users to use PolyBase in an Azure Synapse Analytics dedicated SQL pool to query the content of the data records that host the Twitter feeds. Data must be protected by using row-level security (RLS). The users must be authenticated by using their own Azure AD credentials.

• Maximize the throughput of ingesting Twitter feeds from Event Hubs to Azure Storage without purchasing additional throughput or capacity units.

• Store Twitter feeds in Azure Storage by using Event Hubs Capture. The feeds will be converted into Parquet files.

• Ensure that the data store supports Azure AD-based access control down to the object level.

• Minimize administrative effort to maintain the Twitter feed data records.

• Purge Twitter feed data records that are older than two years.
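One possible way to meet the two-year purge requirement with little ongoing administration is an Azure Storage lifecycle management rule. The case study does not name this feature, so treat the following as a sketch under assumptions rather than the expected answer. It builds a policy document as a Python dictionary and prints it as JSON. The rule name and the `twitter-feeds/` prefix are hypothetical, and 730 days is used as an approximation of two years.

```python
import json

TWO_YEARS_IN_DAYS = 2 * 365  # approximation; ignores leap days


def build_purge_policy(prefix: str, days: int = TWO_YEARS_IN_DAYS) -> dict:
    """Build a lifecycle management policy that deletes old block blobs."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "purge-old-twitter-feeds",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": [prefix],  # hypothetical container/path prefix
                    },
                    "actions": {
                        "baseBlob": {
                            # Delete blobs not modified for more than `days` days.
                            "delete": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(build_purge_policy("twitter-feeds/"), indent=2))
```

Because the storage service evaluates the rule on its own schedule, no custom purge job has to be written or maintained.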

Data Integration Requirements

Contoso identifies the following requirements for data integration:

• Use an Azure service that leverages the existing SSIS packages to ingest on-premises data into datasets stored in a dedicated SQL pool of Azure Synapse Analytics and transform the data.

• Identify a process to ensure that changes to the ingestion and transformation activities can be version controlled and developed independently by multiple data engineers.

HOTSPOT

You need to design an analytical storage solution for the transactional data. The solution must meet the sales transaction dataset requirements.

What should you include in the solution? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
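The partitioning requirement in the case study (efficient loads by month, with boundary values belonging to the partition on the right) can be illustrated with a small sketch. It is not Synapse code: it only models how a date maps to a partition number when month-start boundaries use RANGE RIGHT semantics. The function names and the sample dates are hypothetical.

```python
from bisect import bisect_right
from datetime import date


def monthly_boundaries(first_month: date, months: int) -> list[date]:
    """Return the first day of each month, starting at first_month."""
    boundaries = []
    year, month = first_month.year, first_month.month
    for _ in range(months):
        boundaries.append(date(year, month, 1))
        month += 1
        if month > 12:
            year, month = year + 1, 1
    return boundaries


def partition_number(value: date, boundaries: list[date]) -> int:
    """1-based partition number under RANGE RIGHT semantics.

    With RANGE RIGHT, a value equal to a boundary falls into the partition
    to the right of that boundary, so bisect_right is the matching lookup.
    """
    return bisect_right(boundaries, value) + 1


if __name__ == "__main__":
    bounds = monthly_boundaries(date(2024, 1, 1), 3)  # 1 Jan, 1 Feb, 1 Mar
    samples = (date(2023, 12, 31), date(2024, 1, 1), date(2024, 1, 31), date(2024, 2, 1))
    for d in samples:
        print(d, "-> partition", partition_number(d, bounds))
```

Because each boundary is the first day of a month and belongs to the partition on its right, every calendar month maps to exactly one partition, so a whole month can be loaded or removed as a unit.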
